So far in this series of blog posts we’ve discussed picking a replacement monitoring solution and getting it up and running. This instalment will cover setting up the actual alerting rules for our customers’ servers, and going live with the new solution.

Kapacitor Alerts

As mentioned in previous posts, the part of the TICK stack responsible for alerting is Kapacitor. Put simply, Kapacitor takes the metrics stored in InfluxDB, processes and transforms them, and then sends alerts based on configured thresholds. It can work with both batches and streams of data, and the difference is fairly clear from the names: a batch takes multiple data points as its input and looks at them as a whole, while a stream accepts a single point at a time, folding each new point into the mix and re-evaluating the thresholds each time.
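To make the distinction concrete, here is a toy illustration in Bash and awk. It is not how Kapacitor works internally, and both function names are hypothetical. Each reads one CPU percentage per line: the batch version waits for every point and judges their mean once, while the stream version re-checks the threshold as each point arrives.

```shell
#!/usr/bin/env bash
# Toy illustration only: hypothetical helpers, not Kapacitor's implementation.

# Batch: collect every point first, then evaluate the window as a whole (its mean).
batch_check() {
  awk -v lvl="$1" '
    { sum += $1; n++ }
    END {
      if (n > 0 && sum / n >= lvl) { print "ALERT (mean " sum / n ")" } else { print "ok" }
    }'
}

# Stream: evaluate the threshold again for every point as it arrives.
stream_check() {
  awk -v lvl="$1" '$1 >= lvl { print "point " NR ": ALERT (" $1 ")" }'
}
```

With the input `50 90 60` and a threshold of 80, `batch_check` reports `ok` (the mean is about 66.7), whereas `stream_check` flags the second point straight away.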

As we wanted to monitor servers constantly over large time periods, stream data was the obvious choice for our alerts.

We went through many iterations of our alerting scripts, known as TICK scripts, before mostly settling on what we have now. I’ll explain one of our “Critical” CPU alert scripts to show how things work (comments inline):

var critLevel = 80 // The CPU percentage we want to alert on
var critTime = 15 // How long (in minutes) CPU usage must stay at or above critLevel before we alert
var critResetTime = 15 // How long (in minutes) CPU usage must stay back below critLevel before we reset the alert

stream // Tell Kapacitor that this alert uses stream data
    |from()
        .measurement('cpu') // Tell Kapacitor to look at the CPU data
    |where(lambda: ("host" == '$reported_hostname') AND ("cpu" == 'cpu-total')) // Only look at the total CPU data for one particular server (more on this below)
    |groupBy('host')
    |eval(lambda: 100.0 - "usage_idle") // Calculate the percentage of CPU used...
        .as('cpu_used') // ...and store this value in its own field
    |stateDuration(lambda: "cpu_used" >= critLevel) // Keep track of how long CPU usage has been at or above the alerting threshold
        .unit(1m) // Minutely resolution is enough for us, so we measure the duration in minutes
        .as('crit_duration') // Store the duration calculated above for later use
    |stateDuration(lambda: "cpu_used" < critLevel) // The same as the above 3 lines, but for resetting the alert status
        .unit(1m)
        .as('crit_reset_duration')
    |alert() // Create an alert...
        .id('CPU - {{ index .Tags "host" }}') // The alert title
        .message('{{.Level}} - CPU Usage > ' + string(critLevel) + ' on {{ index .Tags "host" }}') // The information contained in the alert
        .details('''
{{ .ID }}
{{ .Message }}
''')
        .crit(lambda: "crit_duration" >= critTime) // Generate a critical alert when CPU usage has been above the threshold for the specified amount of time
        .critReset(lambda: "crit_reset_duration" >= critResetTime) // Reset the alert when CPU usage has been back below the threshold for the specified amount of time
        .stateChangesOnly() // Only send out information when an alert changes from normal to critical, or back again
        .log('/var/log/kapacitor/kapacitor_alerts.log') // Record in a log file that this alert was generated / reset
        .email() // Send the alert via email
    |influxDBOut() // Write the alert data back into InfluxDB for later reference...
        .measurement('kapacitor_alerts') // The name to store the data under
        .tag('kapacitor_alert_level', 'critical') // Information on the alert
        .tag('metric', 'cpu') // The type of alert that was generated

The above TICK script generates a “Critical” level alert when the CPU usage on a given server has been at or above 80% for 15 minutes or more. Once it has alerted, the alert will not reset until the CPU usage has come back down below 80% for a further 15 minutes. Both the initial notification and the “close” notification are sent via email.
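The interplay between the two stateDuration nodes and .crit()/.critReset() is easiest to see by replaying some data. Below is a rough simulation in Bash and awk, not Kapacitor code. The hypothetical simulate_cpu_alert function assumes exactly one cpu_used sample per minute (real Telegraf data usually arrives more often, and stateDuration measures the time elapsed between points), and it prints a line only when the alert level changes, mimicking .stateChangesOnly().

```shell
#!/usr/bin/env bash
# Rough simulation of the CPU alert above; a hypothetical helper, not Kapacitor code.
# Assumes one cpu_used sample per line and one sample per minute (mirroring .unit(1m)).
# Usage: simulate_cpu_alert [critLevel] [critTime] [critResetTime] < samples
simulate_cpu_alert() {
  local crit_level="${1:-80}" crit_time="${2:-15}" crit_reset_time="${3:-15}"
  awk -v lvl="$crit_level" -v ct="$crit_time" -v rt="$crit_reset_time" '
    BEGIN { above_since = -1; below_since = -1; level = "OK" }
    {
      minute = NR - 1
      # Like stateDuration(): minutes since the condition became true, -1 while false.
      if ($1 >= lvl) {
        if (above_since < 0) above_since = minute
        below_since = -1
        crit_duration = minute - above_since
        crit_reset_duration = -1
      } else {
        if (below_since < 0) below_since = minute
        above_since = -1
        crit_reset_duration = minute - below_since
        crit_duration = -1
      }
      # .crit() raises the level; once critical, only the reset condition lowers it again.
      if (level == "OK" && crit_duration >= ct) {
        level = "CRITICAL"; print "minute " minute ": CRITICAL"
      } else if (level == "CRITICAL" && crit_reset_duration >= rt) {
        level = "OK"; print "minute " minute ": OK"
      }
    }'
}
```

Feeding it 16 samples at 90% followed by 16 samples at 50% prints `minute 15: CRITICAL` and then `minute 31: OK`. A run of only 15 samples above the threshold prints nothing, because the duration starts counting from zero on the first sample above the line.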

The vast majority of our TICK scripts are very similar to the above, changing only the metric being monitored (memory, disk space, disk IO, etc.) along with the threshold levels and durations.

To load this TICK script into Kapacitor, we use the kapacitor command line interface. Here’s what we’d run:

kapacitor define example_server_cpu -type stream -tick cpu.tick -dbrp example_server.autogen
kapacitor enable example_server_cpu

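The first command defines a Kapacitor task named “example_server_cpu” of type “stream” from the cpu.tick file, against the database and retention policy we specify (here, the autogen retention policy of the example_server database). The second command enables the task so that it starts processing incoming data.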

In reality, we automate this process with another script, which also replaces the $reported_hostname placeholder with the actual hostname of the server we’re setting the alert up for.
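That script isn’t reproduced here, but a minimal sketch of the idea in Bash might look like the following. The function names, the assumption that each template file is named after its metric (cpu.tick and so on) and the <database>_<metric> task naming (borrowed from example_server_cpu above) are all hypothetical; the kapacitor flags are the ones used above. Setting KAPACITOR=echo turns it into a dry run.

```shell
#!/usr/bin/env bash
# Hypothetical sketch of the per-server alert set-up; not our actual script.

# Render a TICK script template for one server by filling in $reported_hostname.
render_tick_template() {
  local template="$1" host="$2"
  # Plain hostnames (letters, digits, dots, hyphens) are safe as a sed
  # replacement string; refuse anything else.
  case "$host" in
    ''|*[!A-Za-z0-9.-]*) echo "invalid hostname: $host" >&2; return 1 ;;
  esac
  sed 's/\$reported_hostname/'"$host"'/g' "$template"
}

# Define and enable the alert task for one server; KAPACITOR=echo gives a dry run.
create_alert() {
  local host="$1" db="$2" template="$3"
  local metric task rendered status
  metric=$(basename "$template" .tick)   # e.g. cpu.tick -> cpu
  task="${db}_${metric}"                 # e.g. example_server_cpu
  rendered=$(mktemp) || return 1
  render_tick_template "$template" "$host" > "$rendered" || { rm -f "$rendered"; return 1; }
  "${KAPACITOR:-kapacitor}" define "$task" -type stream -tick "$rendered" -dbrp "${db}.autogen" &&
    "${KAPACITOR:-kapacitor}" enable "$task"
  status=$?
  rm -f "$rendered"   # Kapacitor keeps its own copy of the task once defined
  return "$status"
}
```

For example, `create_alert web01.example.com example_server cpu.tick` would define and enable a task called example_server_cpu whose where() clause matches web01.example.com.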

Getting customer servers reporting

Now that we could actually alert on information coming into InfluxDB, it was time to get each of our customers’ servers reporting in. Since we have a large number of customer systems to monitor, installing and configuring Telegraf by hand was simply not an option. We used Ansible to roll the configuration out to the servers that needed it, which covered 12 different operating systems and 4 different configurations.

Here’s a list of the tasks that Ansible carries out for us:

- On our servers:
  - Create a dedicated InfluxDB database for the customer’s server
  - Create a locked-down, write-only InfluxDB user for the server to send its data in with
  - Add a Grafana data source to link the database to the customer
- On the customer’s server:
  - Set up the Telegraf package repository so that Telegraf stays up to date
  - Install Telegraf
  - Configure the Telegraf outputs to point to our endpoint with the correct server-specific credentials
  - Configure the Telegraf inputs with all the metrics we want to capture
  - Restart Telegraf to load the new configuration
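The two halves of that list have to agree on the database name and credentials. Here is a minimal Bash sketch of generating both sides from the same values. The function names, URL and naming scheme are hypothetical and Ansible does this work in our set-up; the InfluxQL statements assume InfluxDB 1.x, and the settings are standard options of Telegraf’s [[outputs.influxdb]] plugin.

```shell
#!/usr/bin/env bash
# Hypothetical sketch: names, URL and credential handling are illustrative only.
# Assumes InfluxDB 1.x and a password without single quotes
# (for example one generated with: openssl rand -hex 16).

# On our side: a database per customer server plus a user that may only write to it.
influx_setup_statements() {
  local db="$1" user="$2" password="$3"
  printf '%s\n' \
    "CREATE DATABASE \"${db}\"" \
    "CREATE USER \"${user}\" WITH PASSWORD '${password}'" \
    "GRANT WRITE ON \"${db}\" TO \"${user}\""
}

# Run each statement with the influx CLI (admin authentication is left out here).
apply_influx_setup() {
  local stmt
  while IFS= read -r stmt; do
    "${INFLUX:-influx}" -execute "$stmt" </dev/null || return 1
  done < <(influx_setup_statements "$@")
}

# On the customer's server: the matching Telegraf output section.
telegraf_output_config() {
  local url="$1" db="$2" user="$3" password="$4"
  cat <<EOF
[[outputs.influxdb]]
  urls = ["${url}"]
  database = "${db}"
  username = "${user}"
  password = "${password}"
  ## The user can only write, so stop Telegraf trying to create the database.
  skip_database_creation = true
EOF
}
```

Generating both pieces from one set of values is what keeps a server’s write-only credentials and its database in step; the skip_database_creation line matters because a write-only user is not allowed to run CREATE DATABASE.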

The above should be pretty self-explanatory. Whilst every one of these steps is carried out for a new server, we wrote the Ansible files so that most of them can also be run independently of one another. This means that in future we can, for example, add another input to report metrics on with relative ease.

For those of you not familiar with Ansible, here’s an excerpt from one of the files. It places a Telegraf config file into the relevant directory on the server, and sets the file permissions to the values we want:

---
- name: Copy inputs config onto client
  copy:
    src: ../files/telegraf/telegraf_inputs.conf
    dest: /etc/telegraf/telegraf.d/telegraf_inputs.conf
    owner: root
    group: root
    mode: 0644
  become: yes
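For comparison, here is roughly what that task does, written as a Bash function. It is a hypothetical helper for illustration only: like Ansible’s copy module, it only replaces the file when the contents differ and reports “changed” or “ok”, but it makes no attempt to handle remote hosts, backups or mode-only changes.

```shell
#!/usr/bin/env bash
# Hypothetical helper, for illustration only: a rough local equivalent of the
# Ansible "copy" task above. Mode-only changes are not detected.
copy_config() {
  local src="$1" dest="$2" mode="${3:-0644}"
  # Like Ansible, leave the file alone (and report "ok") if the content already matches.
  if [ -f "$dest" ] && cmp -s "$src" "$dest"; then
    echo "ok: $dest"
    return 0
  fi
  mkdir -p "$(dirname "$dest")" || return 1
  if [ "$(id -u)" -eq 0 ]; then
    # owner: root / group: root only make sense when running as root (become: yes)
    install -o root -g root -m "$mode" "$src" "$dest" || return 1
  else
    install -m "$mode" "$src" "$dest" || return 1
  fi
  echo "changed: $dest"
}
```

Run as root with the paths from the task, the first call reports “changed” and any later call with the same source file reports “ok”. That idempotence is what makes it safe to re-run the same playbook across every server.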

Using more Ansible, we brought the various tasks together into a single repository, did lots of testing, and then ran everything against our customers’ servers. Shortly afterwards, all of our customers’ servers were reporting in. After making sure everything looked right, we created and enabled the alerts for each server. To do this, we wrote a Bash script that looped over the list of our customers’ servers and the available alert scripts, combining them so that we had alerts for the key metrics on every server. The floodgates had been opened!
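A stripped-down sketch of that loop is below. The servers file format (one hostname and database per line), the directory of one .tick template per metric, the <database>_<metric> task names and the APPLY switch are all assumptions for illustration. By default it only renders the scripts and prints the kapacitor commands, so the plan can be reviewed before anything is created.

```shell
#!/usr/bin/env bash
# Hypothetical sketch: create every alert template for every server.
# Servers file (assumed format): "<hostname> <database>" per line, '#' for comments.
# Hostnames are assumed to be plain DNS names (safe as a sed replacement).
create_all_alerts() {
  local servers_file="$1" template_dir="$2" out_dir="$3"
  local host db template metric task rendered
  mkdir -p "$out_dir" || return 1
  while read -r host db || [ -n "$host" ]; do
    case "$host" in ''|'#'*) continue ;; esac   # skip blank lines and comments
    for template in "$template_dir"/*.tick; do
      [ -e "$template" ] || continue            # the glob matched nothing
      metric=$(basename "$template" .tick)      # cpu.tick -> cpu
      task="${db}_${metric}"                    # e.g. example_server_cpu
      rendered="$out_dir/${task}.tick"
      sed 's/\$reported_hostname/'"$host"'/g' "$template" > "$rendered"
      if [ "${APPLY:-0}" = 1 ]; then
        # </dev/null stops kapacitor from reading the rest of the server list
        kapacitor define "$task" -type stream -tick "$rendered" -dbrp "${db}.autogen" </dev/null
        kapacitor enable "$task" </dev/null
      else
        echo "kapacitor define $task -type stream -tick $rendered -dbrp ${db}.autogen"
        echo "kapacitor enable $task"
      fi
    done
  done < "$servers_file"
}
```

With two servers and two templates (say cpu.tick and disk.tick), a dry run prints four define/enable pairs. Running it again with APPLY=1 creates the tasks for real.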

Summary

So, by the end of everything covered in this series, we had ourselves a very respectable New Relic replacement. We ran the two systems side by side for a few weeks and are very happy with the outcome. While what we have described here is a basic guide to setting the system up, we have already started making improvements that go well beyond what we had before. If any of them are exciting enough, there will be more blog posts coming your way, so make sure you come back soon.

We’re also hoping to open source all of our TICK scripts, Ansible configs, and the various other snippets used to tie everything together at some point, once they’ve been tidied up and improved a bit more. If you can’t wait that long and need them now, drop us a line and we’ll do our best to help you out.

I hope you’ve enjoyed this series. It was a great project that the whole company took part in, and it has enabled us to provide an even better experience for our customers. Thanks for reading!

Replacement Server Monitoring

Feature image background by swadley licensed CC BY 2.0.

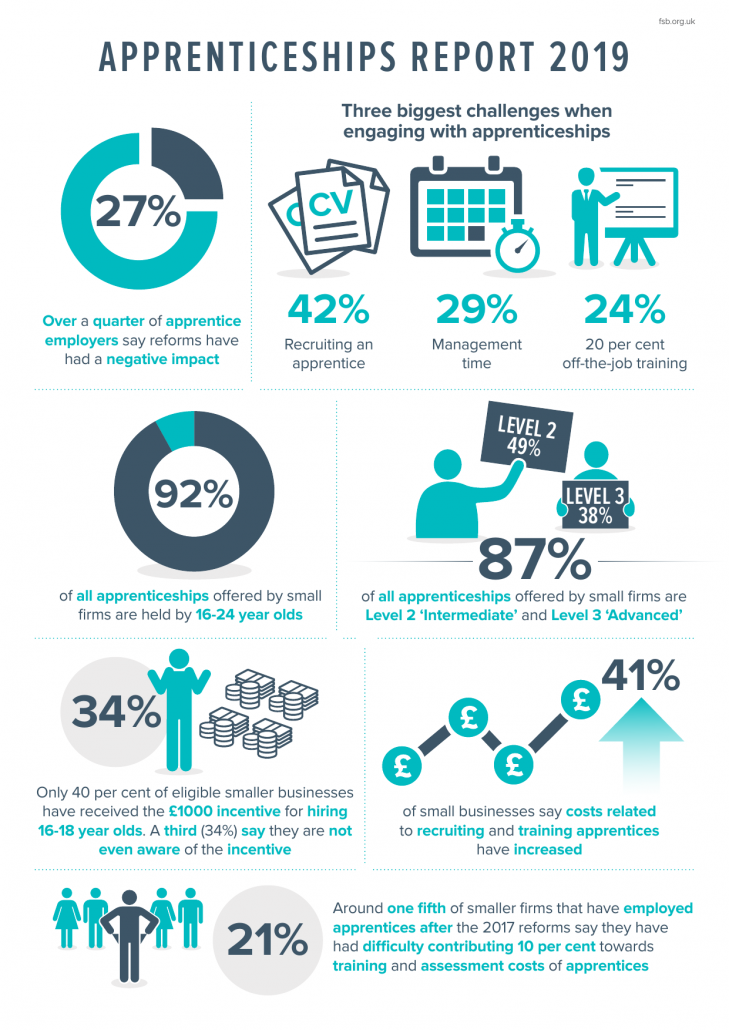

Dogsbody Technology are passionate about Apprenticeships, providing a new career opportunity to people and promoting an alternative to University for those leaving college.
